Fortune 500 Logistics Provider — Real-Time Fleet Tracking

Summary

Built an event-driven fleet tracking platform on Azure using Event Hubs (Kafka endpoint) and AKS. GPS/vehicle telemetry is validated, deduplicated, and upserted into PostgreSQL (PostGIS) for geospatial queries, with Blob Storage capture for analytics. Live status/ETAs are exposed through API Management with clear SLAs, with the aim of cutting WISMO calls and supporting steady OTIF (on-time, in-full) improvements.

Problem

- Stale location data and missed events from heterogeneous devices/apps.

- No single low-latency source of truth for “last known location,” geofence status, or ETA.

- WISMO (“Where is my order?”) calls spiked during delays; updates to CRM/notifications were inconsistent.

Solution Mechanics

Primary pattern: Event-driven streaming (Java + Spring Boot on AKS).

Ingestion (Kafka on Azure)

- Devices/mobile apps publish to Azure Event Hubs (Kafka endpoint) with key = `vehicleId` to preserve ordering per vehicle.

- Topics: `telemetry.raw`, `telemetry.parsed`, `events.geofence`, `errors.dlq`.
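To illustrate why keying by `vehicleId` preserves per-vehicle ordering, here is a minimal sketch of key-to-partition mapping. The class and method names are hypothetical, and the hash is a simple stand-in: the Kafka Java client's default partitioner uses murmur2 over the serialized key, so real partition numbers will differ.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

/** Illustrative only: a stable key always maps to the same partition. */
final class VehiclePartitioner {
    private VehiclePartitioner() {}

    /**
     * Maps a key to a partition index in [0, partitionCount).
     * Assumption: Arrays.hashCode is a stand-in for the client's real hash.
     */
    static int partitionFor(String vehicleId, int partitionCount) {
        if (partitionCount <= 0) {
            throw new IllegalArgumentException("partitionCount must be positive");
        }
        int hash = Arrays.hashCode(vehicleId.getBytes(StandardCharsets.UTF_8));
        // floorMod keeps the result non-negative even for negative hashes.
        return Math.floorMod(hash, partitionCount);
    }
}
```

Because the mapping depends on the partition count, changing that count remaps keys, so per-vehicle ordering is only guaranteed while the count stays fixed.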

Processing (AKS / Spring Boot)

- Telemetry Ingestor (Spring Kafka): JSON schema validation, clock-skew checks, dedupe by `(vehicleId, eventTs)`, publish to `telemetry.parsed`.

- Enricher/Aggregator: computes last-known location, speed, heading, stop/idle detection, and ETA; emits geofence enter/exit to `events.geofence`.

- Status Writer: idempotent UPSERT of per-vehicle status into Azure Database for PostgreSQL (PostGIS) with `POINT` geometry; maintains a compact history table for recent windows.
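The Ingestor's clock-skew check and `(vehicleId, eventTs)` dedupe could look roughly like the sketch below. It is plain Java without Spring Kafka wiring; the class name, the `Decision` enum, the symmetric skew limit, and the bounded in-memory key set are all assumptions for illustration, not the production design.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical gate: rejects skewed timestamps, then drops repeated (vehicleId, eventTs) pairs. */
final class TelemetryGate {
    enum Decision { ACCEPT, DUPLICATE, SKEWED }

    private final Duration maxSkew;
    private final Map<String, Boolean> seen;

    TelemetryGate(Duration maxSkew, int maxTrackedKeys) {
        this.maxSkew = maxSkew;
        // Insertion-ordered map that evicts the oldest key once the cap is exceeded.
        this.seen = new LinkedHashMap<String, Boolean>(16, 0.75f, false) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<String, Boolean> eldest) {
                return size() > maxTrackedKeys;
            }
        };
    }

    /** Not thread-safe; assumes one instance per consumer thread. */
    Decision check(String vehicleId, Instant eventTs, Instant receivedAt) {
        // Assumption: the same limit applies to timestamps in the future and in the past.
        Duration skew = Duration.between(eventTs, receivedAt).abs();
        if (skew.compareTo(maxSkew) > 0) {
            return Decision.SKEWED;
        }
        String key = vehicleId + "|" + eventTs.toEpochMilli();
        if (seen.putIfAbsent(key, Boolean.TRUE) != null) {
            return Decision.DUPLICATE;
        }
        return Decision.ACCEPT;
    }
}
```

An in-memory set like this is lost on restart and only covers a bounded window, which is one reason the downstream write is kept idempotent as well.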


Storage & Analytics

- Event Hubs Capture → Azure Blob Storage (Parquet) for long-term analytics and model training.

APIs & Notifications

- API Orchestration Layer (Spring Boot behind Azure API Management): `GET /vehicles/{id}/status`, `GET /vehicles/search?bbox=…&since=…`, `POST /subscriptions/webhook` (register customer/CRM webhooks).

- Azure Service Bus topics: fan-out status changes and ETA deltas to CRM, customer comms, and alerting services.
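As a sketch of how the search endpoint might handle its `bbox` parameter, the class below parses and validates a bounding box and tests point containment. The `minLon,minLat,maxLon,maxLat` ordering is an assumption (the source does not specify the format), and boxes that cross the antimeridian are not handled.

```java
/** Hypothetical bbox value object; parameter order is assumed, not taken from the API spec. */
final class BoundingBox {
    final double minLon;
    final double minLat;
    final double maxLon;
    final double maxLat;

    private BoundingBox(double minLon, double minLat, double maxLon, double maxLat) {
        this.minLon = minLon;
        this.minLat = minLat;
        this.maxLon = maxLon;
        this.maxLat = maxLat;
    }

    /** Parses "minLon,minLat,maxLon,maxLat"; throws IllegalArgumentException on bad input. */
    static BoundingBox parse(String raw) {
        String[] parts = raw.split(",");
        if (parts.length != 4) {
            throw new IllegalArgumentException("bbox needs 4 comma-separated numbers");
        }
        // NumberFormatException is a subclass of IllegalArgumentException.
        double minLon = Double.parseDouble(parts[0].trim());
        double minLat = Double.parseDouble(parts[1].trim());
        double maxLon = Double.parseDouble(parts[2].trim());
        double maxLat = Double.parseDouble(parts[3].trim());
        if (minLon < -180 || maxLon > 180 || minLat < -90 || maxLat > 90) {
            throw new IllegalArgumentException("bbox coordinates out of range");
        }
        if (minLon > maxLon || minLat > maxLat) {
            throw new IllegalArgumentException("bbox min must not exceed max");
        }
        return new BoundingBox(minLon, minLat, maxLon, maxLat);
    }

    /** Inclusive containment test for a (lon, lat) point. */
    boolean contains(double lon, double lat) {
        return lon >= minLon && lon <= maxLon && lat >= minLat && lat <= maxLat;
    }
}
```

In the database itself, the same filter would more likely be expressed with PostGIS (for example `ST_MakeEnvelope` and the `&&` operator) so that a spatial index can be used; the Java check is mainly useful for input validation and tests.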

Observability & Ops

- Micrometer → Azure Monitor/App Insights (producer lag, consumer lag, p95 ingest→status, DLQ depth).

- Replay tool: reprocess from Blob or `errors.dlq` by time range/vehicle.

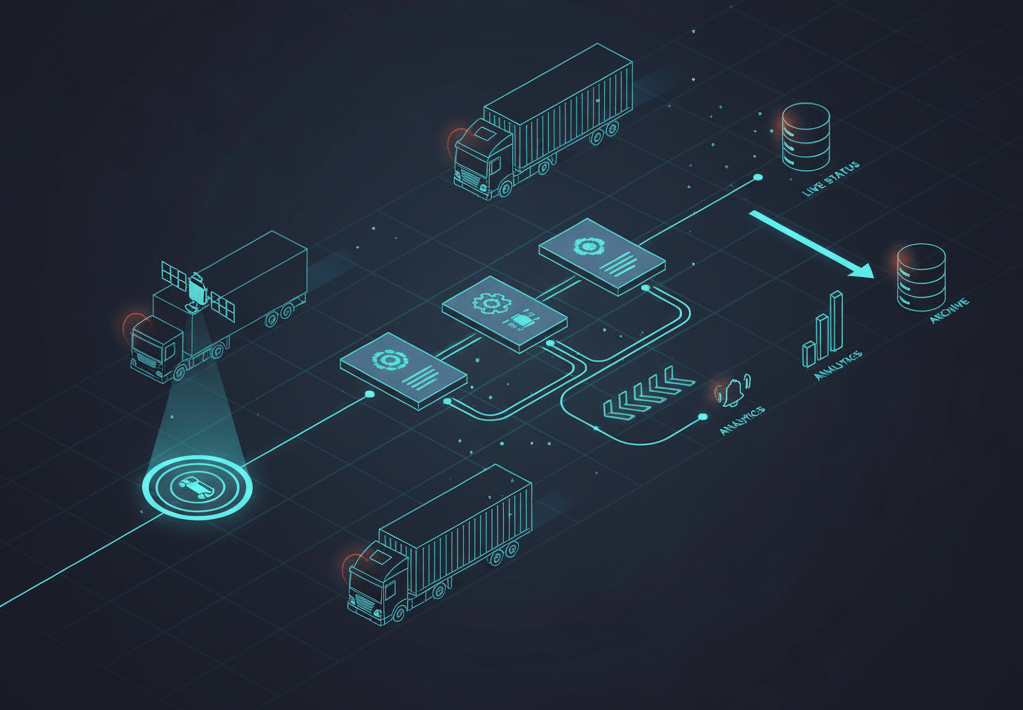

Diagram 1 - Context Diagram — Real-time fleet tracking on Azure


Diagram 2 - Sequence — Telemetry ingest to live status/ETA


Diagram 3 - Operations — DLQ & replay controls


Process Flow

- Producer (truck device/mobile) publishes GPS/vehicle event to Event Hubs (Kafka) with key = `vehicleId`.

- Telemetry Ingestor validates schema, drops duplicates (same `vehicleId` + `eventTs`), normalizes coordinates/timezone, writes to `telemetry.parsed`.

- Enricher/Aggregator calculates last-known status (moving/idle), speed, geofence enter/exit, and ETA to next stop/hub.

- Status Writer upserts current snapshot into Postgres/PostGIS and appends a slim history row (TTL/partitioned).

- API Orchestration Layer serves `GET /status` and geospatial searches (e.g., bounding box), with p95 < 300 ms.

- Service Bus publishes status/ETA changes to CRM and customer notification services; retries/DLQ are handled at the messaging layer.

- Event Hubs Capture writes raw streams to Blob; ops can replay selected windows to recover from defects.

- App Insights dashboards track freshness (ingest→status), lag per consumer group, and DLQ trends.
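The Enricher/Aggregator step in the flow above can be sketched as follows, assuming circular geofences and a naive distance-over-speed ETA. The source does not describe the actual geofence shapes or ETA model, so the class name, the haversine distance, and the straight-line ETA are illustrative assumptions.

```java
import java.util.Optional;

/** Hypothetical tracker for one vehicle against one circular geofence. */
final class GeofenceTracker {
    enum Transition { ENTER, EXIT }

    static final double EARTH_RADIUS_M = 6_371_000.0;

    private final double centerLat;
    private final double centerLon;
    private final double radiusM;
    private Boolean inside; // null until the first position fix

    GeofenceTracker(double centerLat, double centerLon, double radiusM) {
        this.centerLat = centerLat;
        this.centerLon = centerLon;
        this.radiusM = radiusM;
    }

    /** Great-circle distance in meters between two lat/lon points (haversine formula). */
    static double haversineMeters(double lat1, double lon1, double lat2, double lon2) {
        double dLat = Math.toRadians(lat2 - lat1);
        double dLon = Math.toRadians(lon2 - lon1);
        double a = Math.sin(dLat / 2) * Math.sin(dLat / 2)
                + Math.cos(Math.toRadians(lat1)) * Math.cos(Math.toRadians(lat2))
                * Math.sin(dLon / 2) * Math.sin(dLon / 2);
        return 2 * EARTH_RADIUS_M * Math.asin(Math.sqrt(a));
    }

    /** Returns ENTER/EXIT only when the inside/outside state changes; the first fix emits nothing. */
    Optional<Transition> update(double lat, double lon) {
        boolean nowInside = haversineMeters(centerLat, centerLon, lat, lon) <= radiusM;
        Boolean before = inside;
        inside = nowInside;
        if (before == null || before == nowInside) {
            return Optional.empty();
        }
        return Optional.of(nowInside ? Transition.ENTER : Transition.EXIT);
    }

    /** Naive ETA in seconds: remaining distance over current speed; infinite when stopped. */
    static double etaSeconds(double remainingMeters, double speedMps) {
        if (speedMps <= 0) {
            return Double.POSITIVE_INFINITY;
        }
        return remainingMeters / speedMps;
    }
}
```

Emitting only on state changes keeps `events.geofence` quiet while a vehicle sits inside a zone; a real ETA would account for road distance and traffic rather than straight-line distance.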

Outcomes

- Fresher location data: ingest→status p95 held between 5 and 8 s during peak (Verified in pre-prod load tests).

- Lower WISMO calls: proactive status updates and ETA deltas reduce “where is my order?” inquiries (Modeled −15–25% based on alert subscription uptake).

- OTIF uplift: geofence/ETA signals enable better exception handling (Modeled +2–5% assuming intervention on predicted delays).

- Single query surface for live tracking with bounding-box searches (Verified functional).

Strategic Business Impact

- Customer experience lift (Proxy): real-time visibility lowers uncertainty and escalations.

- Operational efficiency (Modeled): dispatcher actions on predicted late arrivals stabilize downstream slots.

- Data asset creation (Proxy): clean archive (Parquet on Blob) unlocks planning and driver scoring use cases.

Method tags: Verified (measured in env tests), Modeled (estimations from baselines), Proxy (leading indicators such as freshness and adoption).

Role & Scope

Owned architecture and build for Event Hubs topics/partitions, AKS services (Ingestor, Aggregator, Status/API), Postgres/PostGIS schema, APIM exposures, Service Bus integration, Capture/replay, and observability dashboards.

Key Decisions & Trade-offs

- Event Hubs (Kafka endpoint) vs self-managed Kafka: managed ops and elastic throughput vs fewer broker-level knobs.

- Idempotent upserts over “exactly-once”: simpler recovery and replay safety vs slightly more write overhead.

- Postgres/PostGIS for hot geospatial reads vs specialized time-series DB: strong geospatial, fewer moving parts.

- Capture to Blob (Parquet) for durable history vs retaining long windows in Postgres: cheap storage with batch-friendly format.

- Per-vehicle partition key: preserves order per vehicle but can create hot partitions for large fleets → mitigated with partition scaling and compaction policies.
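The idempotent-upsert decision can be illustrated with a "newer wins" guard. The sketch below pairs a PostgreSQL `ON CONFLICT` statement with an in-memory model of the same rule, so the behavior can be exercised without a database. The table and column names (`vehicle_status`, `vehicle_id`, `event_ts`, `geom`) are hypothetical, and the statement assumes a unique constraint on `vehicle_id`.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical status store: replays and stale events leave the snapshot unchanged. */
final class StatusUpsert {
    /** Assumed schema; parameters are vehicle_id, event_ts, lon, lat. */
    static final String UPSERT_SQL =
            "INSERT INTO vehicle_status (vehicle_id, event_ts, geom) "
          + "VALUES (?, ?, ST_SetSRID(ST_MakePoint(?, ?), 4326)) "
          + "ON CONFLICT (vehicle_id) DO UPDATE "
          + "SET event_ts = EXCLUDED.event_ts, geom = EXCLUDED.geom "
          + "WHERE vehicle_status.event_ts < EXCLUDED.event_ts";

    static final class Snapshot {
        final long eventTsMillis;
        final double lon;
        final double lat;

        Snapshot(long eventTsMillis, double lon, double lat) {
            this.eventTsMillis = eventTsMillis;
            this.lon = lon;
            this.lat = lat;
        }
    }

    // In-memory stand-in for the table, keyed by vehicle id.
    private final Map<String, Snapshot> table = new HashMap<>();

    /** Mirrors the SQL guard: writes only if the event is strictly newer. Returns true if written. */
    boolean upsert(String vehicleId, long eventTsMillis, double lon, double lat) {
        Snapshot current = table.get(vehicleId);
        if (current != null && current.eventTsMillis >= eventTsMillis) {
            return false; // replayed or older event: no-op
        }
        table.put(vehicleId, new Snapshot(eventTsMillis, lon, lat));
        return true;
    }

    Snapshot get(String vehicleId) {
        return table.get(vehicleId);
    }
}
```

With this guard, reprocessing a window from Blob or the DLQ cannot move a vehicle's snapshot backwards, which is what makes replay safe without exactly-once delivery.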

Risks & Mitigations

- Out-of-order/late events → sequence by event time with tolerance window; recompute snapshot if a late event arrives.

- Clock skew → server-side timestamping + drift detection; reject extreme skews.

- Producer dropouts → heartbeat detection; create “stale” status after threshold and alert.

- Traffic spikes → autoscale AKS consumers; pre-provision Event Hubs throughput units.

- Privacy/PII → keep payload minimal (vehicleId, coords, timestamps); secure tokens via APIM; encrypt at rest and in transit.
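One way to implement the tolerance window for out-of-order events is a small reorder buffer that holds events until the newest event time seen has moved past them by the tolerance. The sketch below is an assumption about how this could be done, not the documented implementation; the class name and the choice to flag (rather than buffer) events older than anything already released are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.PriorityQueue;
import java.util.function.ToLongFunction;

/** Hypothetical per-vehicle buffer that releases events in event-time order. */
final class ReorderBuffer<T> {
    private final long toleranceMillis;
    private final ToLongFunction<T> eventTime;
    private final PriorityQueue<T> pending;
    private long maxSeen = Long.MIN_VALUE;
    private long lastReleased = Long.MIN_VALUE;

    ReorderBuffer(long toleranceMillis, ToLongFunction<T> eventTime) {
        this.toleranceMillis = toleranceMillis;
        this.eventTime = eventTime;
        this.pending = new PriorityQueue<>(Comparator.comparingLong(eventTime));
    }

    /** True when the event is older than one already released; route it to snapshot recompute. */
    boolean isLate(T event) {
        return eventTime.applyAsLong(event) < lastReleased;
    }

    /** Buffers the event and returns every event whose time is at or before (maxSeen - tolerance). */
    List<T> accept(T event) {
        if (isLate(event)) {
            throw new IllegalArgumentException("late event: handle via snapshot recompute");
        }
        pending.add(event);
        maxSeen = Math.max(maxSeen, eventTime.applyAsLong(event));
        long watermark = maxSeen - toleranceMillis;
        List<T> ready = new ArrayList<>();
        while (!pending.isEmpty() && eventTime.applyAsLong(pending.peek()) <= watermark) {
            T next = pending.poll();
            lastReleased = eventTime.applyAsLong(next);
            ready.add(next);
        }
        return ready;
    }
}
```

The trade-off is latency: every event waits up to the tolerance before release, so the window has to fit inside the ingest→status latency budget.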

Suggested Metrics (run-time SLOs)

- Ingest→status latency p50/p95/p99.

- Event Hubs lag (per consumer group) & throughput units utilization.

- DLQ depth and replay success rate.

- API p95 for `/status` and bbox searches.

- Freshness % (vehicles with updates in last N seconds).

- Notification latency (status change → Service Bus → consumer).
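For reference, two of these metrics can be computed as in the sketch below: a nearest-rank percentile over latency samples and freshness as the share of vehicles updated within a window. In practice Micrometer timers and the monitoring backend would normally produce these; the helper class and its method names are hypothetical and only make the definitions concrete.

```java
import java.util.Arrays;

/** Hypothetical helpers that pin down the metric definitions. */
final class SloMath {
    private SloMath() {}

    /** Nearest-rank percentile: smallest sample with at least p percent of samples at or below it. */
    static long percentile(long[] samples, double p) {
        if (samples.length == 0) {
            throw new IllegalArgumentException("no samples");
        }
        if (p <= 0 || p > 100) {
            throw new IllegalArgumentException("p must be in (0, 100]");
        }
        long[] sorted = samples.clone();
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p * sorted.length / 100.0);
        return sorted[rank - 1];
    }

    /** Percentage of vehicles whose last update is within windowMillis of nowMillis. */
    static double freshnessPercent(long[] lastUpdateMillis, long nowMillis, long windowMillis) {
        if (lastUpdateMillis.length == 0) {
            return 0.0;
        }
        long fresh = Arrays.stream(lastUpdateMillis)
                .filter(t -> nowMillis - t <= windowMillis)
                .count();
        return 100.0 * fresh / lastUpdateMillis.length;
    }
}
```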

Closing principle

Favor freshness and recovery over perfect delivery. Design every stage for idempotence and replay, so telemetry pipelines stay reliable under real-world noise.