Tier 1 US Telecom — OSS Data Validation & Remediation Framework

Summary

Built a layered validation and remediation platform around a Global Telecom Provider’s OSS graph (600M+ nodes, 1.2B+ relationships). The framework combines Neo4j constraints, a Drools rules engine, CDC-based change capture, and an audited invalid store, so new and updated records are continuously checked, auto-remediated when possible, and routed for follow-up when not.

Problem

Inventory/topology data was ingested from 25+ diverse source systems of uneven quality. Invalid records (missing keys, bad types, stale timestamps), duplicates, and schema drift eroded trust in the graph, triggered rollbacks, and increased downstream rework in assurance and CMDB replication. Teams needed always-on guardrails, not one-off data fixes.

Solution Mechanics

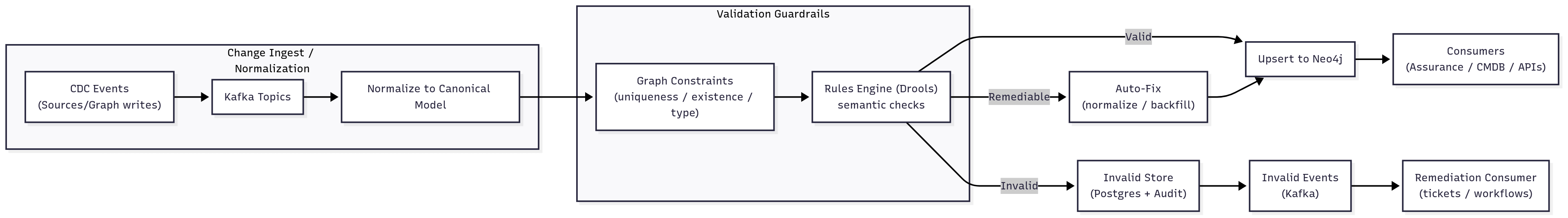

- Layered validation

  - Graph constraints (uniqueness, existence, type, node keys) to stop obvious defects at write time.

  - Rules engine (Drools) to enforce nuanced business/semantic checks on nodes and relationships.

- Classification → Actions

  - Valid → upsert to graph.

  - Remediable → auto-fix (derive keys, normalize codes, backfill timestamps) then upsert.

  - Invalid → persist to relational store with full failure context for audit and manual resolution.

- CDC-driven loop

  - Change capture on new/updated entities; stream through Kafka into the validator.

  - Invalids also emit CDC events so a remediation consumer can trigger workflows or tickets.

- Governance & observability

  - Versioned rule templates; run metadata and reports (throughput, invalid rate, remediation rate).

  - Roadmap: dashboard for runs/history, and business-friendly rule management UI.
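The write-time layer can be illustrated with Neo4j 5 constraint DDL. The sketch below is a minimal, hypothetical generator: the `LabelSpec` class, the `Device` label, and all property and constraint names are illustrative assumptions, not the project's actual schema. Note that existence, property-type, and node-key constraints are Neo4j Enterprise Edition features, and property-type constraints need a recent 5.x release.

```python
from dataclasses import dataclass


# Hypothetical per-label constraint spec; labels and properties are illustrative only.
@dataclass(frozen=True)
class LabelSpec:
    label: str
    unique: tuple = ()      # properties that must be unique across the label
    required: tuple = ()    # properties that must exist (IS NOT NULL)
    typed: tuple = ()       # (property, Cypher type) pairs, e.g. ("name", "STRING")
    node_key: tuple = ()    # composite key: the properties must exist and be unique together


def constraint_statements(spec: LabelSpec) -> list:
    """Render Neo4j 5 constraint DDL; IF NOT EXISTS makes reruns safe."""
    name = spec.label.lower()
    head = f"FOR (n:{spec.label}) REQUIRE"
    stmts = []
    for p in spec.unique:
        stmts.append(f"CREATE CONSTRAINT {name}_{p}_unique IF NOT EXISTS {head} n.{p} IS UNIQUE")
    for p in spec.required:
        stmts.append(f"CREATE CONSTRAINT {name}_{p}_exists IF NOT EXISTS {head} n.{p} IS NOT NULL")
    for p, cypher_type in spec.typed:
        stmts.append(f"CREATE CONSTRAINT {name}_{p}_type IF NOT EXISTS {head} n.{p} IS :: {cypher_type}")
    if spec.node_key:
        props = ", ".join(f"n.{p}" for p in spec.node_key)
        stmts.append(f"CREATE CONSTRAINT {name}_node_key IF NOT EXISTS {head} ({props}) IS NODE KEY")
    return stmts
```

Each generated statement would be run once at deploy time (for example through the official Python driver's `session.run`); keeping the spec in code lets constraint changes go through the same review and versioning as rule changes.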

Diagram 1 — Validation & Remediation System

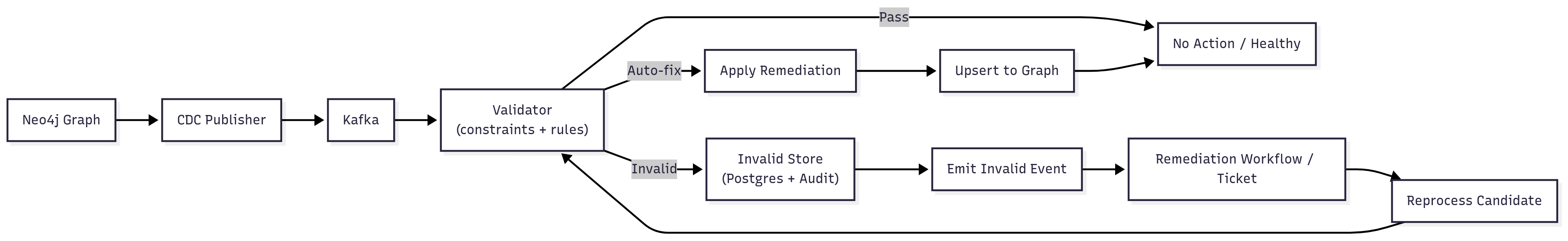

Diagram 2 — CDC Change Capture & Remediation Loop

Process Flow

- CDC detects create/update events from source loaders or the graph write path.

- Events land in Kafka; entities are normalized to the canonical model.

- Graph constraints (uniqueness/existence/type) block obvious defects.

- Entities pass through the Drools validation rules (semantic checks).

- Engine classifies each record: valid / remediable / invalid.

- Valid → upsert to Neo4j; Remediable → auto-fix then upsert.

- Invalid → persist to Postgres (audited), emit an event; consumer triggers remediation or tickets.

- Reports capture counts, invalid reasons, remediation outcomes, and trend lines for governance.
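The classify-and-route steps in the flow above can be sketched in plain Python. This is not the Drools API: each rule is a hypothetical function that returns a `Finding` (optionally carrying an auto-fix) or `None`, and in-memory dicts and lists stand in for Neo4j, the Postgres invalid store, and the Kafka event topic. Rule IDs, field names (`id`, `vendor`, `serial`, `status`, `updated_at`, `ingested_at`), and the status-code table are assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Optional

# Hypothetical mapping from source-system status strings to canonical codes.
STATUS_CODES = {"active": "ACTIVE", "in service": "ACTIVE", "decom": "RETIRED", "retired": "RETIRED"}


@dataclass(frozen=True)
class Finding:
    rule_id: str
    message: str
    fix: Optional[Callable[[dict], dict]] = None  # a fix makes the finding remediable


def rule_key(r: dict) -> Optional[Finding]:
    if r.get("id"):
        return None
    if r.get("vendor") and r.get("serial"):  # derive the key from vendor + serial
        return Finding("R1", "id missing, derived from vendor:serial",
                       lambda x: {**x, "id": f"{x['vendor']}:{x['serial']}"})
    return Finding("R1", "id missing and not derivable")


def rule_status(r: dict) -> Optional[Finding]:
    raw = r.get("status")
    if raw in STATUS_CODES.values():
        return None
    code = STATUS_CODES.get(str(raw).strip().lower())
    if code:  # normalize a known alias to its canonical code
        return Finding("R2", f"non-canonical status {raw!r}", lambda x: {**x, "status": code})
    return Finding("R2", f"unknown status {raw!r}")


def rule_timestamp(r: dict) -> Optional[Finding]:
    if r.get("updated_at") is not None:
        return None
    if r.get("ingested_at") is not None:  # backfill from the ingest timestamp
        return Finding("R3", "updated_at missing, backfilled",
                       lambda x: {**x, "updated_at": x["ingested_at"]})
    return Finding("R3", "no timestamp available")


RULES = [rule_key, rule_status, rule_timestamp]


def classify(record: dict, rules=RULES):
    """Return (verdict, record_to_write, findings)."""
    findings = [f for rule in rules if (f := rule(record)) is not None]
    if not findings:
        return "valid", record, []
    if all(f.fix for f in findings):
        fixed = record
        for f in findings:
            fixed = f.fix(fixed)
        if all(rule(fixed) is None for rule in rules):  # re-validate after fixing
            return "remediable", fixed, findings
    return "invalid", record, findings


def route(record: dict, graph: dict, invalid_store: list, events: list) -> str:
    verdict, out, findings = classify(record)
    if verdict == "invalid":
        reasons = [(f.rule_id, f.message) for f in findings]
        # Audited row with full failure context, plus an event for the remediation consumer.
        invalid_store.append({"record": record, "reasons": reasons,
                              "failed_at": datetime.now(timezone.utc).isoformat()})
        events.append({"type": "invalid", "id": record.get("id"), "reasons": reasons})
    else:
        graph[out["id"]] = out  # stand-in for an idempotent MERGE upsert
    return verdict
```

Re-running every rule on the fixed record guards against an auto-fix that quietly introduces a new defect: a record counts as remediable only if every finding had a fix and the fixed record then passes cleanly.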

Outcomes

- Defect leakage reduced: fewer bad records propagate to assurance/CMDB.

- Rollback & rework down: constraints + rules catch issues before consumers see them.

- Trust restored: engineers and NOC teams rely on a continuously validated graph.

- Auditability: every invalid record is logged with the failure reason, when it failed, and what fixed it (or who owns the fix).

Strategic Business Impact

- Rollback avoidance & release stability (Modeled; Medium confidence)

  - Fewer post-deployment rollbacks due to data defects; modeled from baseline invalid rates × cost per rollback × avoided incidents.

- Ops efficiency (Proxy)

  - Auto-remediation + clear failure reasons reduce manual data clean-up and unplanned tickets.

- Assurance quality (Proxy)

  - Higher-integrity inputs → more reliable impact analysis and fewer false alarms.

Method tags: Rollback avoidance = Modeled; Ops efficiency & assurance quality = Proxy.

Evidence hooks: invalid rate trend, remediation rate, constraint violations, rollback counts.

Role & Scope

- Led the end-to-end architecture for constraints, rules, CDC integration, invalid store, and reporting.

- Defined the validation taxonomy, rule versioning/governance, and the classification → action paths.

- Aligned ingestion, graph, CMDB, and assurance teams on contracts and SLAs.

Key Decisions & Trade-offs

- Constraints + Rules: constraints stop structural errors early; rules capture semantics that constraints can’t.

- Pre-write vs Post-write: lightweight constraint checks on write; richer rules run in stream with idempotent upserts.

- Externalized rules: keeping JSON/DSL rules outside application code sped up iteration, but required versioning and test gates.

- Invalid store: relational DB for auditability & ad-hoc analytics; emits CDC events to trigger remediation.

- CDC over batch: continuous protection and shorter feedback loops; required rigorous idempotency, DLQs, and backpressure controls.
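The idempotency and DLQ requirements of the CDC path can be sketched as a sink that applies each event at most once per entity version. This is a minimal sketch under stated assumptions: `IdempotentSink` is hypothetical, each CDC event is assumed to carry an `entity_id` and a monotonically increasing integer `version` (for example a source commit sequence), and `write` stands in for the real Cypher upsert. Retries here have no backoff; a production consumer would add one.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class IdempotentSink:
    """Applies each CDC event at most once per (entity_id, version)."""
    max_attempts: int = 3
    versions: dict = field(default_factory=dict)   # entity_id -> last applied version
    dlq: list = field(default_factory=list)        # events that exhausted their retries

    def apply(self, event: dict, write: Callable[[dict], None]) -> str:
        eid, version = event["entity_id"], event["version"]
        if self.versions.get(eid, -1) >= version:
            return "skipped"  # duplicate delivery or stale replay: safe no-op
        last_error = None
        for _ in range(self.max_attempts):
            try:
                write(event)  # stand-in for a MERGE-based Cypher upsert
            except Exception as exc:  # e.g. transient lock contention
                last_error = exc
                continue
            self.versions[eid] = version
            return "applied"
        # Park the event with its failure context instead of blocking the partition.
        self.dlq.append({"event": event, "error": repr(last_error), "attempts": self.max_attempts})
        return "dead-lettered"
```

Because replays and duplicates become no-ops, the consumer can safely re-read a topic after a failure, which is what makes continuous CDC processing tolerable at this scale.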

Risks & Mitigations

- False positives / rule drift → staged rule rollout, shadow mode, versioned templates, and unit tests over synthetic/real samples.

- Write contention / hot partitions → batched Cypher writes, relationship uniqueness, partitioned topics, and adaptive batch sizing.

- Surge in invalids → DLQ isolation, rate-limited remediation, and backlog dashboards.

- Model evolution → schema registry for canonical fields; connector contract tests.
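Shadow mode, as a mitigation for false positives and rule drift, can be sketched as running a candidate rule version next to the active one and reporting where their verdicts differ, without acting on the candidate's output. The function names and the promotion thresholds below are hypothetical and purely illustrative.

```python
from collections import Counter
from typing import Callable, Iterable

# A classifier maps a record to "valid", "remediable", or "invalid".
Classifier = Callable[[dict], str]


def shadow_compare(records: Iterable[dict], active: Classifier, candidate: Classifier) -> dict:
    """Evaluate a candidate rule version beside the active one; only the active verdict is acted on."""
    total = 0
    transitions = Counter()   # (active_verdict, candidate_verdict) -> count, disagreements only
    samples = []
    for record in records:
        total += 1
        a, c = active(record), candidate(record)
        if a != c:
            transitions[(a, c)] += 1
            if len(samples) < 10:  # keep a few examples for rule authors to review
                samples.append((record, a, c))
    disagreements = sum(transitions.values())
    return {
        "total": total,
        "disagreement_rate": disagreements / total if total else 0.0,
        "transitions": dict(transitions),
        "samples": samples,
    }


def ready_to_promote(report: dict, max_disagreement: float = 0.01, max_new_rejections: int = 0) -> bool:
    """Hypothetical gate: block rollout if the candidate flips too many verdicts
    or rejects records that the active version accepted."""
    new_rejections = report["transitions"].get(("valid", "invalid"), 0)
    return report["disagreement_rate"] <= max_disagreement and new_rejections <= max_new_rejections
```

Tracking the `("valid", "invalid")` transition separately matters because those are exactly the new false positives a stricter rule version would introduce.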

Suggested Metrics (run-time SLOs)

- Invalid rate (by entity/type), top invalid reasons, remediation success rate.

- Constraint violation rate and retries.

- CDC lag p95, DLQ depth & recovery time.

- Traversal/consumer freshness after remediation (time-to-healthy).

- Rollback count and time lost to data defects (trend).
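Several of these metrics can be computed per run from the classification results. A minimal sketch, assuming each result row carries the hypothetical fields listed in the docstring; the remediation success rate is defined here as remediable records over records where an auto-fix was attempted, which is one reasonable definition rather than necessarily the project's. The p95 uses the nearest-rank method.

```python
import math
from collections import Counter


def p95(values: list):
    """Nearest-rank 95th percentile (no interpolation)."""
    if not values:
        raise ValueError("p95 of an empty sample is undefined")
    ordered = sorted(values)
    return ordered[math.ceil(0.95 * len(ordered)) - 1]


def run_report(results: list, top_n: int = 3) -> dict:
    """Summarize one validation run.

    Each result is assumed to carry: status ("valid" | "remediable" | "invalid"),
    entity_type, reasons (rule IDs), fix_attempted (bool), and cdc_lag_ms.
    """
    total = len(results)
    status = Counter(r["status"] for r in results)
    invalid = [r for r in results if r["status"] == "invalid"]
    per_type = Counter(r["entity_type"] for r in results)
    invalid_per_type = Counter(r["entity_type"] for r in invalid)
    attempted = sum(1 for r in results if r["fix_attempted"])
    return {
        "invalid_rate": status["invalid"] / total if total else 0.0,
        "invalid_rate_by_type": {t: invalid_per_type[t] / n for t, n in per_type.items()},
        "top_invalid_reasons": Counter(x for r in invalid for x in r["reasons"]).most_common(top_n),
        # Share of auto-fix attempts that passed re-validation and were upserted.
        "remediation_success_rate": status["remediable"] / attempted if attempted else None,
        "cdc_lag_p95_ms": p95([r["cdc_lag_ms"] for r in results]) if results else None,
    }
```

Emitting this dictionary per run and storing it alongside the rule version gives the trend lines the governance reports rely on.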

Principle carried forward:

Treat data quality as a continuous control, not a one-time cleanup—combine schema constraints, semantic rules, CDC, and audited invalid handling to keep large graphs trustworthy.